A mouse’s view of the world, seen through its whiskers

Berkeley neuroscientists reconstruct mouse's spatial map of the world around its head

June 27, 2017

Mice, unlike cats and dogs, are able to move their whiskers to map out their surroundings, much as humans use their fingers to build a 3D picture of a darkened room.

UC Berkeley researchers have for the first time reconstructed the whisker map a mouse creates of its surroundings in order to navigate its world, catch insects and avoid cats.

These are not the typical brain maps that show which brain cells are activated when a particular whisker is tweaked, or, in humans, the so-called homunculus, the map in the cortex of touch receptors on the skin.

“It’s not a body map but a spatial map of the area around the animal’s head that is being scanned by the whiskers: a totally new thing that has not been shown before in any species,” said Hillel Adesnik, a UC Berkeley assistant professor of molecular and cell biology.

Humans may also have sensory maps of the outside world encoded in the neurons of the brain’s cerebral cortex, Adesnik said — not only touch maps, but also maps of what we see and hear, and perhaps our other senses.

Adesnik and his colleagues published their results in the current issue of the journal Neuron, now available online.

Whisking behavior

Researchers study rodent whiskers because the sensory nerves at the base of each whisker connect to well-defined structures in the cortex, or outer layer of the brain: so-called barrel columns, named for their barrel-like shape. Each of the mouse’s 24 large facial whiskers has an associated barrel column that’s activated when the whisker encounters an object. The entire barrel cortex is between 2.5 and 3 millimeters across.

Neuroscientists have thoroughly mapped these columns over the past decades, and continue to study them to discover how brain circuits process, store and use sensory information.

Adesnik, however, was more interested in how the brain actually uses whisker information to construct a picture of the world around the head.

“Beyond the reach of the whiskers, rodents use vision as well as auditory and olfactory cues to explore the world, but because their near vision is very blurry, close to the face rodents use touch,” he said. “With their whiskers they scan the world the way we would scan with our hand at night on the night table to look for our cellphone.”

The question he asked was, “How does an animal represent space in its cortex so it can localize and then identify an object so that it can execute an action, like picking up the phone, or, for rodents, identifying something it wants to eat?”

The answer lies in cells in the upper layers of the mouse’s somatosensory cortex. While the barrel cells in the preceding cortical layer, layer 4, are patterned according to the whiskers, neurons in layers 2 and 3 receive input from several barrel cells at once and process this information. Layer 4 lies about 400 microns below the cortical surface, while layers 2 and 3 start at a depth of about 100 microns. The outermost layer of the cortex, layer 1, is between 100 and 150 microns thick.

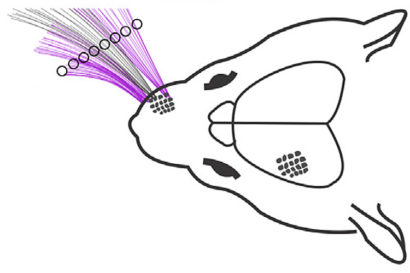

As an object (black circle) was moved within whisking range of the whiskers, researchers recorded neuron activity from the barrel cortex of the brain.

“The hypothesis was that layers 2 and 3, which are one step away from layer 4, are not just encoding whiskers; maybe they are encoding space, that is, the space the whiskers are currently scanning,” Adesnik said. “You can think of it as the mouse’s field of view as it’s moving its whiskers. Maybe there is a map that represents that space, and not the whiskers.”

Using two-photon calcium imaging, which allows more precise localization of fluorescently tagged nerve cells deeper inside brain tissue, Adesnik was able to map how the cells in layers 2 and 3 respond when the mice whisked their whiskers and encountered objects near their head. In most prior studies, mice were sedated or anesthetized and experimenters moved one whisker at a time. In this study, the animals were awake and moved their whiskers into objects while running on a treadmill.

His recordings of cell activation through a window in the skull revealed a smooth map of the physical space the mouse was exploring with its whiskers, not a map of specific whisker activity.

“We think that in layer 4, the neurons really care about whiskers, but not the scanned space,” he said, “while in 2 and 3 they are integrating over whiskers, perhaps even performing a mathematical operation something like smoothing over multiple whiskers, that encodes the absolute horizontal location of the object in the reference frame of the animal’s head.”
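To make the idea of “integrating over whiskers” concrete, here is a minimal toy sketch in Python. It is not the analysis from the paper. The whisker angles, the tuning width and the function names are all invented for illustration. Each simulated barrel responds only when an object is near its own whisker, and a pooled estimate averages the whisker positions, weighted by those responses, to produce a single horizontal location relative to the head.

```python
import math

# Hypothetical toy setup: five whiskers in one row, each sweeping a band of
# horizontal angle (degrees) centered on the value below. Invented numbers.
WHISKER_CENTERS = [-40.0, -20.0, 0.0, 20.0, 40.0]
TUNING_WIDTH = 15.0  # assumed width of each whisker's touch zone, in degrees


def layer4_responses(object_angle, centers=WHISKER_CENTERS):
    """One response per barrel: strong only when the object is near that whisker."""
    return [
        math.exp(-((object_angle - c) ** 2) / (2 * TUNING_WIDTH ** 2))
        for c in centers
    ]


def estimate_position(responses, centers=WHISKER_CENTERS):
    """Pool across whiskers: response-weighted average of the whisker centers."""
    total = sum(responses)
    return sum(r * c for r, c in zip(responses, centers)) / total


if __name__ == "__main__":
    # The pooled estimate moves smoothly with the object, even between whiskers.
    for angle in (-10.0, 0.0, 10.0):
        pooled = estimate_position(layer4_responses(angle))
        print(f"object at {angle:+.0f} deg -> pooled estimate {pooled:+.1f} deg")
```

In this toy model, an object sitting between two whiskers still yields an intermediate estimate, because neighboring barrels respond partially and the pooled average falls between them. If only one whisker is left in the list, the estimate collapses to that whisker's own center no matter where the object is, so position can no longer be read out from the pooled signal.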

The map changes continually as the animal moves its head.

Neurons in layers 2 and 3 of the somatosensory cortex show a preference for the position of an object relative to the mouse’s head (left), which constitutes a map of the space within whisking range. When all but one of the whiskers are trimmed, the neuron responses are scattered all over this layer of the cortex, showing that the layer requires input from many whiskers to map the world around the head.

The findings suggest that humans may also have a smooth touch map of the space around us in the somatosensory cortex; not only a finger representation, but a representation of where the hand is currently scanning, he said.

Adesnik and his lab colleagues are now looking at higher areas of the cortex, which likely do more information processing to identify objects such as prey and connect with motor neurons to trigger a pounce.

Adesnik’s co-authors on the paper are postdoctoral fellow Scott Pluta and graduate students Evan Lyall, Greg Telian and Elena Ryapolova-Webb. The work was supported by the New York Stem Cell Foundation, the Whitehall Foundation and the National Institute of Neurological Disorders and Stroke of the National Institutes of Health.
