Berkeley experts on how to build more secure, faster AI systems

Computer scientists warn of new kinds of attacks, limits to data analysis and other AI systems challenges, but propose new research directions to realize AI's potential.

October 16, 2017

In a new report released today by UC Berkeley’s Real-Time Intelligent Secure Execution Lab (RISELab), leading researchers outline challenges in systems, security and architecture that may impede the progress of Artificial Intelligence, and propose new research directions to address them.

At UC Berkeley’s RISELab, researchers develop technologies that enable applications to make low-latency decisions on live data with strong security.

“To realize the potential of AI as a positive force in our society, there are huge challenges to overcome, and many of these challenges are related to systems, security and computer architecture, so it is critical to understand and address these challenges,” said Ion Stoica, the director of the RISELab. “This report makes a first step in this direction, by discussing these challenges and proposing new research directions to address them.”

AI applications have begun to enter the commercial mainstream during the past decade. The number and variety of such applications are increasing rapidly, driven by the refinement of machine-learning algorithms combined with massive amounts of data, rapidly improving computer hardware and increased access to these technologies. These advances have enabled AI systems to play a key role in applications such as web search, high-speed trading and commerce, and to combine with other technology trends (such as the Internet of Things, augmented reality, biotechnology and autonomous vehicles) to foster new industries. Soon, AI systems are expected to make more and more decisions on humans’ behalf, including decisions that can impact lives and the economy in areas such as medical diagnosis and treatment, robotic surgery, self-driving transportation, financial services, defense and more. The authors hope that this report “… will inspire new research that can advance AI and make it more capable, understandable, secure and reliable.”

According to the report, a key challenge facing AI applications is security. Many companies deploy their AI applications in the public cloud on servers they don’t control, servers that might be shared with malicious players or even direct competitors.

“Two possible approaches to keeping AI systems secure are to leverage secure multiparty computation to handle sensitive data in encrypted form or hardware enclaves where critical parts of AI systems may be kept in a secure execution environment away from other code,” said Raluca Ada Popa, a security expert and a member of the RISELab.
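To make the first of these approaches concrete, the following is a minimal sketch of additive secret sharing, one common building block of secure multiparty computation. It is an illustration only, not the method described in the report: the function names, the modulus and the input numbers are all made up for this example, and it assumes at least three parties that follow the protocol and do not collude.

```python
import secrets

# Hypothetical modulus for this toy scheme; it only needs to exceed the true sum.
MODULUS = 2**61 - 1


def share(value, n_parties):
    """Split value into n_parties random shares that add up to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_values):
    """Compute the sum of all inputs from shares alone."""
    n = len(private_values)
    # Row i holds the shares created by party i; column j is what party j receives.
    distributed = [share(v, n) for v in private_values]
    # Each party adds up only the shares it received, which look random on their own.
    partial_sums = [sum(row[j] for row in distributed) % MODULUS for j in range(n)]
    # Combining the partial sums yields the total without exposing any raw input.
    return sum(partial_sums) % MODULUS


# Made-up private inputs, e.g. counts held by three separate organizations.
private_counts = [12, 7, 30]
print(secure_sum(private_counts))  # prints 49
```

Each individual share is a uniformly random number, so a party that sees only its own column learns nothing about another party's input beyond what the final total implies.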

Because AI systems learn and adapt based on the data they receive, they are susceptible to new types of attacks, such as adversarial learning. Adversarial learning occurs when an adversary manipulates an input's features so that the AI system misclassifies it. For example, an adversary might cause an autonomous vehicle to interpret a stop sign as a yield sign.

“Building systems that are robust against adversarial inputs both during training and prediction will likely require the design of new machine-learning models and network architectures,” said Dawn Song, an expert on AI and security and a RISELab member.
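The stop-sign example above can be illustrated with a toy sketch. The code below assumes a hypothetical three-feature linear classifier with made-up weights, and it nudges each feature by a small amount in the direction that lowers the classifier's score, in the spirit of sign-of-gradient attacks. It is not taken from the report and does not model a real vision system.

```python
def sign(v):
    """Return 1, 0 or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def score(weights, bias, x):
    """Linear score: a positive value means 'stop', otherwise 'yield'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def classify(weights, bias, x):
    return "stop" if score(weights, bias, x) > 0 else "yield"


def perturb(weights, x, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model parameters and input features, chosen only for illustration.
weights = [0.9, -0.6, 0.4]
bias = -0.1
clean_input = [0.5, 0.2, 0.3]

adversarial_input = perturb(weights, clean_input, epsilon=0.2)

print(classify(weights, bias, clean_input))        # prints stop
print(classify(weights, bias, adversarial_input))  # prints yield
```

No feature moves by more than 0.2, yet the predicted label flips, which is why small, targeted changes to an input can be enough to mislead a model.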

Other security challenges outlined in the report include developing personalized AI systems (such as a virtual assistant) without compromising the user’s privacy, allowing organizations to securely share data to solve mutually beneficial problems (such as banks sharing data on fraudulent transactions to more quickly catch emerging fraud) and developing domain-specific AI applications that operate under tight time constraints (such as robotic surgery or self-driving cars).

Another challenge emphasized by the report is handling the exponential growth of data generated by the ever-increasing number of devices and sensors at a time when Moore’s Law, which governed the rapid growth of computing power and storage for the past 40 years, is slowing down.

According to David Patterson, a renowned computer architect and RISELab member, “A promising way to address this challenge is to design secure, AI-specific computers that are optimized for the task they are performing. For example, Google’s Tensor Processing Unit can be 15 to 30 times faster than a typical CPU or GPU, and use 30 to 80 times less energy.”

Many future AI applications are expected to be deployed in dynamic environments that change continually and unexpectedly. This raises significant challenges, as AI systems need to be able to react rapidly to these changes by continually interacting with the environment, and they need to be prepared for a range of possible contingencies.

“A promising framework for addressing such challenges is reinforcement learning. However, to make reinforcement learning widely applicable for practical problems, we need to develop new systems that address a range of scalability challenges,” said Michael Jordan, a pioneer in machine learning and AI, and a RISELab member.

A related challenge in the context of interacting with real-world environments is that AI systems might not be able to conduct a sufficiently large number of experiments from which to learn. To address this challenge, the report favors an approach in which an AI system continually keeps a simulator in sync with the real world so it can use the simulator to predict the results of its actions in the real world.

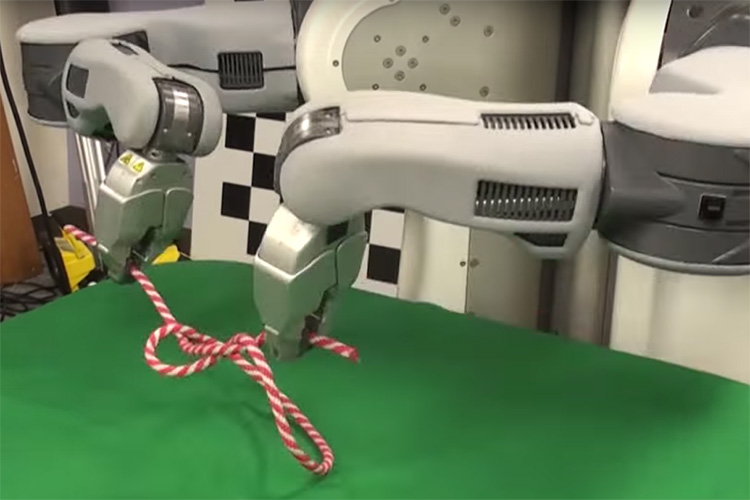

“For example, it may be possible to realistically simulate grasping objects millions of times to learn grasping policies that are robust to errors in sensing and control,” said Ken Goldberg, a member of the RISELab.

Another challenge for AI is to maintain control over errors in decision-making, as these errors can literally mean life or death in some applications. Perfectly labeled, accurate datasets are the ideal, but the real-world datasets used to train AI systems are much noisier and contain biases. One approach for creating more reliable systems is to better track data sources.

“Building fine-grained provenance support into AI systems to connect outcome changes to the data sources that caused these changes would allow applications to automatically infer the reliability of data sources, and in the process build more robust systems,” said Joseph Gonzalez, an assistant professor of electrical engineering and computer sciences and a faculty member in the RISELab.