Lost your keys? Your cat? The brain can rapidly mobilize a search party

April 21, 2013

A contact lens on the bathroom floor, an escaped hamster in the backyard, a car key in a bed of gravel: How are we able to focus so sharply to find that proverbial needle in a haystack? Scientists at the University of California, Berkeley, have discovered that when we embark on a targeted search, various visual and non-visual regions of the brain mobilize to track down a person, animal or thing.

Our brain is wired so we can focus sharply on searching – and finding – lost pets, among other things

That means that if we’re looking for a youngster lost in a crowd, the brain areas usually dedicated to recognizing other objects such as animals, or even the areas governing abstract thought, shift their focus and join the search party. The brain thus rapidly turns into a highly focused child-finder, redirecting resources it normally uses for other mental tasks.

“Our results show that our brains are much more dynamic than previously thought, rapidly reallocating resources based on behavioral demands, and optimizing our performance by increasing the precision with which we can perform relevant tasks,” said Tolga Cukur, a postdoctoral researcher in neuroscience at UC Berkeley and lead author of the study published today (Sunday, April 21) in the journal Nature Neuroscience.

“As you plan your day at work, for example, more of the brain is devoted to processing time, tasks, goals and rewards, and as you search for your cat, more of the brain becomes involved in recognition of animals,” he added.

The findings help explain why we find it difficult to concentrate on more than one task at a time. The results also shed light on how people are able to shift their attention to challenging tasks, and may provide greater insight into neurobehavioral and attention deficit disorders such as ADHD.

These results were obtained in studies that used functional magnetic resonance imaging (fMRI) to record the brain activity of study participants as they searched for people or vehicles in movie clips. In one experiment, participants held down a button whenever a person appeared in the movie. In another, they did the same with vehicles.

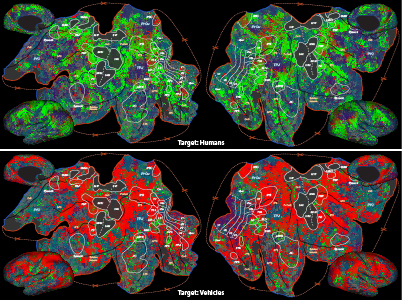

The brain scans simultaneously measured neural activity via blood flow in thousands of locations across the brain. Researchers used regularized linear regression analysis, which finds correlations in data, to build models showing how each of the roughly 50,000 locations near the cortex responded to each of the 935 categories of objects and actions seen in the movie clips. Next, they compared how much of the cortex was devoted to detecting humans or vehicles depending on whether or not each of those categories was the search target.
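The analysis described above can be illustrated with a small sketch. It is not the researchers' code: the article does not say which form of regularization was used, so ridge regression is assumed here, and the data, sizes, threshold and names such as `ridge_fit`, `fraction_tuned` and `HUMAN` are hypothetical. The sketch fits one weight per category and location on simulated recordings, then compares what share of locations is tuned to humans under two search conditions.

```python
import numpy as np

def ridge_fit(X, Y, alpha=1.0):
    """Closed-form ridge regression: one weight per (category, location) pair."""
    n_features = X.shape[1]
    # Solve (X^T X + alpha * I) W = X^T Y for W.
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def fraction_tuned(W, category, threshold=0.5):
    """Share of locations whose fitted weight for `category` exceeds `threshold`."""
    return float(np.mean(W[category] > threshold))

rng = np.random.default_rng(0)
# Toy sizes for illustration; the study used 935 categories and ~50,000 locations.
n_timepoints, n_categories, n_locations = 400, 20, 300
HUMAN = 0  # hypothetical index of the "human" category

# Binary indicators: which categories are on screen at each time point.
X = (rng.random((n_timepoints, n_categories)) < 0.2).astype(float)

# Simulated "true" tuning (an assumption): more locations respond to humans
# when humans are the search target than when vehicles are.
W_vehicle_search = rng.normal(0.0, 0.1, (n_categories, n_locations))
W_human_search = W_vehicle_search.copy()
W_vehicle_search[HUMAN, :60] += 1.0   # 60 locations tuned to humans
W_human_search[HUMAN, :150] += 1.0    # 150 locations tuned to humans

# Simulated noisy recordings for each search condition.
Y_vehicle_search = X @ W_vehicle_search + rng.normal(0.0, 0.5, (n_timepoints, n_locations))
Y_human_search = X @ W_human_search + rng.normal(0.0, 0.5, (n_timepoints, n_locations))

# Fit a model per condition, then compare how much of the "cortex" is tuned to humans.
frac_vehicle_search = fraction_tuned(ridge_fit(X, Y_vehicle_search), HUMAN)
frac_human_search = fraction_tuned(ridge_fit(X, Y_human_search), HUMAN)
print(f"tuned to humans: vehicle search {frac_vehicle_search:.2f}, "
      f"human search {frac_human_search:.2f}")
```

In this toy setup the same stimulus matrix is reused for both conditions to keep the comparison simple; a real analysis would also need to account for the delay between neural activity and the blood-flow signal, which the sketch ignores.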

When participants search for humans, more parts of the brain are engaged (shown in green in the top image). Red in the bottom image shows the brain’s attention shifting during a search for vehicles.

They found that when participants searched for humans, relatively more of the cortex was devoted to humans, and when they searched for vehicles, more of the cortex was devoted to vehicles. For example, areas that were normally involved in recognizing specific visual categories such as plants or buildings switched to become attuned to humans or vehicles, vastly expanding the area of the brain engaged in the search.

“These changes occur across many brain regions, not only those devoted to vision. In fact, the largest changes are seen in the prefrontal cortex, which is usually thought to be involved in abstract thought, long-term planning, and other complex mental tasks,” Cukur said.

The findings build on an earlier UC Berkeley brain imaging study that showed how the brain organizes thousands of animate and inanimate objects into what researchers call a “continuous semantic space.” Those findings challenged previous assumptions that every visual category is represented in a separate region of the visual cortex. Instead, researchers found that categories are actually represented in highly organized, continuous maps.

The latest study goes further to show how the brain’s semantic space is warped during a visual search, depending on the search target. Researchers have posted their results in an interactive, online brain viewer. Other co-authors of the study are UC Berkeley neuroscientists Jack Gallant, Alexander Huth and Shinji Nishimoto. Funding for the research was provided by the National Eye Institute of the National Institutes of Health.