How UC Berkeley researchers are making online spaces safer for all

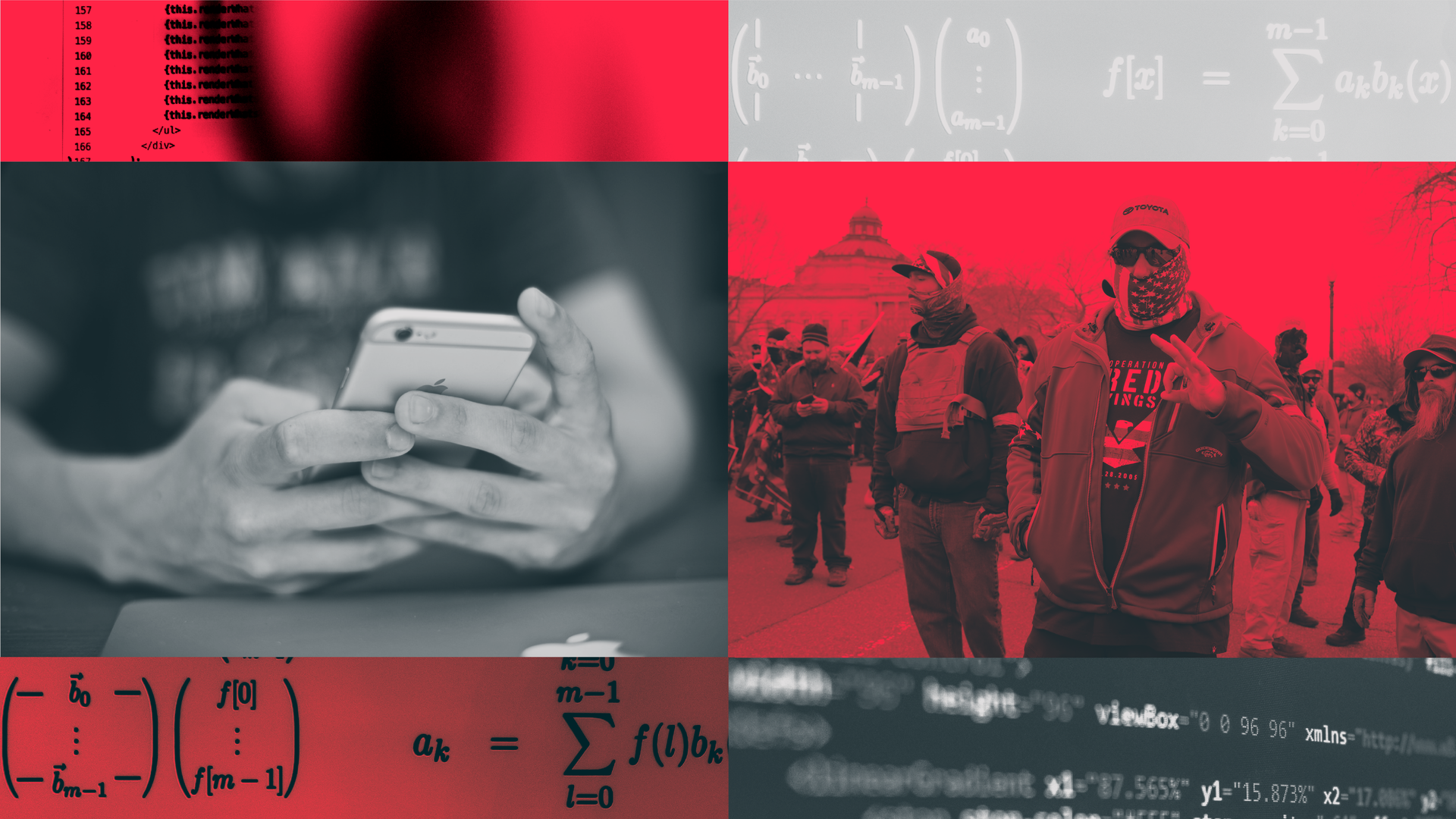

Across campus, researchers are building tools to support freedom of expression on the internet while minimizing the potential for harm.

Brittany Hosea-Small for UC Berkeley

September 30, 2024

Despite our society’s collective addiction to scrolling through social media, many of us can’t help but feel a twinge of dread when seeing notifications we’ve missed. For every clever meme, scintillating fact or adorable animal that crosses our feeds, we’re just as likely to encounter a snarky attack, racial slur or hate-filled comment.

But the potential dangers go far beyond anxiety. A 2021 Pew Research poll found that a quarter of Americans have experienced severe forms of harassment online, including physical threats, stalking, sexual harassment and sustained harassment, often tied to their political beliefs. And this does not include the additional harms caused by hate speech, misinformation and disinformation, which are even harder to measure.

While many social media companies are developing community guidelines and investing in both human and algorithmic content moderators to help uphold them, these efforts hardly feel like enough to stem the tide of toxicity. And even when platforms succeed in removing problematic content and banning perpetrators, people who are the target of online hate or harassment remain extremely vulnerable. After all, it only takes a few clicks for a banned user to create a new account or repost removed content.

At UC Berkeley, researchers are reimagining how to support freedom of expression online while minimizing the potential for harm. By holding social media companies accountable, building tools to combat online hate speech, and uplifting the survivors of online abuse, they are working to create safer and more welcoming online spaces for everyone.

“There are a lot of exciting things about social media that, I think, make it irresistible. We can have real-time collaboration, we can share our accomplishments, we can share our dreams, our stories and our ideas. This type of crowdsourced expertise has real value,” said Claudia von Vacano, executive director of Berkeley’s D-Lab and leader of the Measuring Hate Speech Project. “But I think that we need to use tools to ensure that the climate is one where no one is silenced or marginalized or, even worse, put in actual physical danger.”

‘Awful, but lawful’

The First Amendment limits the U.S. government’s ability to dictate what can and cannot be posted online, leaving it largely to social media companies to decide what types of content they will allow. Thanks to a 1996 federal statute called Section 230, social media companies also cannot be held liable for user-generated content, which means they cannot be sued for hate speech, libel or other harmful material that users post on their sites.

As a result, “I think free speech is thriving online,” said Brandie Nonnecke, director of the CITRIS Policy Lab and Our Better Web project. “We say it’s awful, but it’s lawful.”

However, Section 230 also frees these companies to moderate user-generated content as they see fit. In fact, the law was included in the 1996 Communications Decency Act to encourage companies to remove pornography and violent content from their platforms.

“Everybody tries to blame Section 230 for why we have hate speech and harmful content online. But that’s actually false,” Nonnecke said. “Section 230 actually empowers platforms to moderate content without the threat of a lawsuit.”

Social media companies may not be liable for content posted on their platforms, but they still have a moral obligation to reduce the harms caused by their technologies, Nonnecke says.

In some cases, they may have a legal obligation as well. While the specific content posted on social media is protected speech, the design of the platforms themselves is not. If these technologies function in ways that are harmful (for instance, if an algorithm routinely encourages dangerous behavior), this could be considered negligent product design. In such cases, the companies may be held accountable in the same way that car companies are held accountable for safety defects, or the tobacco industry was held accountable for misleading the public about the dangers of cigarettes.

In a 2023 Wired article, Nonnecke and Hany Farid, a professor in the Department of Electrical Engineering and Computer Sciences and at the School of Information at Berkeley, describe a legal case in which Snapchat was forced to remove its Speed Filter, which let users record and share their velocity in real time, after it led to the deaths of three Wisconsin teenagers in a high-speed crash. The researchers argue that other social media companies should similarly be responsible for dangerous product design.

“The blood is on their hands,” Nonnecke said. “When it’s clear that they have built their platform in a way that incentivizes dangerous and harmful behavior, that has a real human cost, they should be liable for that.”

Measuring hatefulness

Even for platforms that are making a good-faith effort to moderate user-generated content, a fundamental question remains: How do we actually define hate speech? A statement that strikes one person as innocuous might be extremely hurtful to another. And without an agreed-upon definition of what counts as harmful, it is impossible to know how much hate speech is present online, much less how to address it.

The Measuring Hate Speech Project, launched by Claudia von Vacano and her team at the D-Lab in response to the 2016 presidential election, is helping to answer these questions. The project combines social science methodologies with deep learning algorithms to develop new ways to measure hate speech, while mitigating the effects of human bias.

“The Measuring Hate Speech Project has the most granular level intersectional analysis of hate speech of any project that we have encountered,” von Vacano said. “A lot of people who work in this area don’t have social science training, and so they’re not necessarily thinking about bias and identities in the nuanced ways that we do. Because we are social scientists, we’re informed by critical race theory and intersectional theory, and we are bringing all that knowledge to the work.”

Social media companies are increasingly using algorithms to detect hateful content on their platforms, but these algorithms often reflect the biases of their creators, who in the U.S. are primarily white men and others with a high degree of social privilege. Other platforms rely on user reports to flag problematic content, an approach that can similarly favor the opinions of dominant groups.

In these ways, poorly designed content moderation systems can end up harming the very communities they were meant to protect, von Vacano said.

“In [countries] where there have been laws passed to control for hate speech, we know that those laws have actually been used against the protected groups more than they have against dominant groups,” von Vacano said. “This is absolutely happening online, as well. So for example, we see that groups such as the Black Lives Matter movement are more likely to be systematically censored.”

The Measuring Hate Speech Project uses a two-pronged method for assessing hate speech. First, annotators read sample social media comments and rate each comment for hatefulness. Rather than marking comments as either “hate speech” or “not hate speech,” the annotators are asked a series of questions about each comment. These include questions about the sentiment of the comment, whether it targets a specific group or implies that one identity is superior to another, and whether it advocates for violence, among other questions.

The responses are then used to train a deep learning algorithm, which can be applied to new online content to gauge the type and degree of hatefulness that might be present.
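
As a rough illustration of the first step, the sketch below shows how answers to several questions from several annotators might be combined into a single degree-of-hatefulness score per comment. The item names, the 0 to 4 rating scale and the plain averaging are assumptions made for this example; the article does not describe how the project actually combines responses, and the second step (training a deep learning model on the resulting labels) is not shown.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey items, each answered on a 0-4 scale (illustrative only).
ITEMS = ("sentiment", "targets_group", "claims_superiority", "advocates_violence")
MAX_RATING = 4


def rate(*values):
    """Pair one annotator's answers with the survey items, in order."""
    return dict(zip(ITEMS, values))


def comment_scores(annotations):
    """Average every annotator's item ratings into a 0-1 score per comment."""
    per_comment = defaultdict(list)
    for ann in annotations:
        item_mean = mean([ann["ratings"][item] for item in ITEMS])
        per_comment[ann["comment_id"]].append(item_mean / MAX_RATING)
    return {cid: mean(vals) for cid, vals in per_comment.items()}


# Two made-up comments, each rated by two made-up annotators.
annotations = [
    {"comment_id": "c1", "annotator": "a1", "ratings": rate(4, 4, 4, 4)},
    {"comment_id": "c1", "annotator": "a2", "ratings": rate(4, 4, 0, 0)},
    {"comment_id": "c2", "annotator": "a1", "ratings": rate(0, 0, 0, 0)},
    {"comment_id": "c2", "annotator": "a2", "ratings": rate(1, 1, 1, 1)},
]

scores = comment_scores(annotations)
print(scores)
```

With this made-up data, comment c1 lands at 0.75 and c2 at 0.125, placing both on a continuum rather than sorting them into “hate speech” and “not hate speech.”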

“The Measuring Hate Speech Project posits that hate speech is not a binary phenomenon — it’s a multifaceted, complex phenomenon,” said Pratik Sachdeva, a research scientist at the D-Lab who refined the tool alongside von Vacano. “Even calling something hate speech assumes that it has reached a certain threshold, but I think it’s more useful to think about degrees of hatefulness. There is a lot of nuance between being a jerk on the internet and being genocidal.”

Given the complex and often subjective nature of hate speech, the tool also asks each annotator detailed questions about their own identity. Sachdeva has used this information to analyze how a person’s identity influences their sensitivity to different types of hate speech. In a 2023 paper, he showed that annotators were more likely to rate comments targeting their own identity group as containing elements of hate speech.

“My hope is that we can reshape how people think about content moderation, and also highlight the importance of deeply considering how we create machine learning datasets for real life applications,” Sachdeva said. “If you prioritize only people who are similar to you in shaping the data set, you’ll miss out on a whole spectrum of lived experiences, which are very important for how these algorithms operate.”

Supporting survivors

The goal of online content moderation is usually to remove any harmful content and, if appropriate, to suspend or ban the user who posted it.

But this approach ignores a key player in the interaction: the person who was harmed.

Inspired by the framework of restorative justice, Sijia Xiao wants to redirect our focus to the survivors of online harm. As a graduate student at Berkeley, she worked with Professors Niloufar Salehi and Coye Cheshire to study the needs of survivors and build tools to help address them.

“An important aspect of my research is about changing the way people think about online harm and the ways we can address it,” said Xiao, who is now a postdoctoral researcher at Carnegie Mellon University after graduating with a Ph.D. from the I School in May 2024. “It’s not just about getting an apology from the perpetrator or banning the perpetrator. There can be different forms of restorative justice and different ways that we can help survivors.”

Through interviews and co-design activities with adolescents who had experienced interpersonal harm in an online gaming community, Xiao was able to identify five needs of survivors. These include making sense of what happened, receiving emotional support, feeling safe, stopping the continuation of harm and transforming the online environment.

As part of her Ph.D. work, she created an online platform to help address these needs. The platform creates a community of survivors who can support one another and start to make sense of their experiences. It also helps survivors connect with outside resources and create action plans to help reduce the continuation of harm in their communities.

“My hope is that we can build a survivor community where people can share their experiences, validate each other and feel inspired to take action to address that harm,” Xiao said. “It could also be used as a tool for content moderators, or even perpetrators, to understand survivors’ experiences. If perpetrators are willing to see things from the survivors’ side — to understand the impact they have on others — maybe they can transform their behavior.”

While thoughtfully designed digital tools can do a lot to improve everyone’s safety online, Nonnecke believes that truly getting to the root of the toxicity and division that plague our country will require that we all stop scrolling and find more ways to connect face to face. Investing more in our nation’s crumbling public infrastructure, particularly spaces like parks where people from all classes and backgrounds can gather, might be a good place to start.

“Maybe I’m becoming a Luddite, but I think we need more actual engagement with each other in the real world,” Nonnecke said. “We need to get back to basics and interact with each other so we can see we’re more alike than different.”